Your Patients Are Already Telling You What Prompts to Track. You’re Just Not Recording It.

How synthetic personas solve the cold-start problem for local practitioners — and why a $200 pin might be the best AEO investment a chiropractor can make.

Kevin Indig published a piece last week on synthetic personas for prompt tracking that I haven’t been able to stop thinking about. Not because of the SaaS applications — those are obvious. Because of what it implies for local practitioners.

His core argument: AI search is personalized. Every user gets different answers based on their context. You can’t track “the” AI response to a query because there isn’t one. You need to track responses per persona — and synthetic personas let you build those segments from behavioral data instead of spending weeks doing interview research.

Per Kevin’s piece, Stanford validated the approach at 85% accuracy. Bain cut research time by 50-70%. The framework is real.

But Kevin’s examples are all B2B SaaS. Enterprise IT buyer vs. individual user. SOC 2 compliance vs. “best free app.” His data sources are CRM records, support tickets, G2 reviews, sales call transcripts.

A chiropractor doesn’t have a CRM with 10,000 contacts. They don’t have G2 reviews. They don’t have Gong recordings of sales calls.

What they have is better.

The Data Source Nobody’s Using

Every chiropractor in the country has 15-30 conversations per day with people who are in active decision-making mode about their health. Not browsing. Not comparing features in a spreadsheet. Sitting in a room, in pain, asking real questions in real language about real problems.

These conversations contain everything Kevin’s persona card framework needs:

Job-to-be-done. Not inferred from pageview data. Stated directly. “My back went out playing pickleball and my neighbor said I should try a chiropractor.” That’s the job: get out of pain, evaluate whether this thing works, decide whether to book.

Constraints. Also stated directly. “I’ve never been to a chiropractor before.” “My insurance doesn’t cover this, right?” “I need to be able to work tomorrow.” “My doctor said I shouldn’t do this.” These constraints shape how the person searched before they called — and they’re almost never captured.

Vocabulary. This is the big one. Kevin identifies vocabulary as one of five critical persona card fields because it determines how people prompt AI models. And there is an enormous gap between how patients talk and how chiropractors write.

Patients say “threw my back out.” Practice websites say “acute lumbar sprain/strain.”

Patients say “that popping thing.” Websites say “spinal manipulation.”

Patients say “is this going to hurt.” Websites say “gentle, non-invasive techniques.”

Patients say “is my chiropractor scamming me with a 3x/week plan.” Websites say nothing. Because nobody writes about that.

That vocabulary gap is why 1,041 chiropractic practice sites get cited interchangeably in AI answers. They’re all writing in clinical language that doesn’t match how patients phrase their questions to ChatGPT and Perplexity. The content sounds the same because it uses the same sanitized terminology, not the raw, specific, slightly anxious language real people use.
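To make the gap concrete, here is a minimal sketch of how you might check which patient phrases never show up in a page's copy. The function name `missing_phrases` and the sample page text are my own illustrations, not part of Kevin's framework or the study, and plain substring matching is a deliberately crude stand-in for real text analysis.

```python
def missing_phrases(patient_phrases, page_text):
    """Return the patient phrases that never appear in the page copy.

    Matching is case-insensitive and ignores extra whitespace. This is a
    simple substring check, assumed here for illustration only.
    """
    normalized_page = " ".join(page_text.lower().split())
    return [
        phrase
        for phrase in patient_phrases
        if " ".join(phrase.lower().split()) not in normalized_page
    ]


# Phrases taken from the examples above; the page copy is a made-up sample.
patient_phrases = ["threw my back out", "that popping thing", "is this going to hurt"]
page_text = "We treat acute lumbar sprain/strain with gentle, non-invasive spinal manipulation."

gaps = missing_phrases(patient_phrases, page_text)
print(gaps)
```

On this sample, every patient phrase comes back as a gap, which is the pattern the clinical-language sites share.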

What Kevin’s Framework Looks Like for Chiropractic

Kevin’s persona card has five fields. Here’s what those look like when you build them from actual patient conversations instead of CRM data.

Persona 1: The Acute Pain First-Timer

Job-to-be-done: Get out of pain fast, decide if chiropractic is even legitimate

Constraints: Never been before, skeptical, in pain right now, wants fast answers, probably Googled “chiropractor near me” and then asked ChatGPT “is a chiropractor a real doctor” before calling

Success metric: Clear yes/no on whether to go and what to expect at the first visit

Decision criteria: Wants to understand the process before committing — not clinical evidence, but practical logistics. What happens? Does it hurt? How many visits?

Vocabulary: “threw my back out,” “is a chiropractor a real doctor,” “what happens at a first chiropractic visit,” “how much does it cost without insurance”

Persona 2: The Referred Skeptic

Job-to-be-done: Validate that a friend’s recommendation isn’t woo

Constraints: Has been told chiropractic works but has seen negative coverage. Needs evidence but doesn’t read journals. Trusts balanced assessments over boosterism

Success metric: Feels confident the practitioner is evidence-based and honest about limitations

Decision criteria: Red flags matter more than green flags. Looking for reasons NOT to go. Content that acknowledges downsides is more persuasive than content that doesn’t

Vocabulary: “is chiropractic evidence-based,” “chiropractor vs physical therapist,” “is cracking your back bad for you,” “chiropractic pseudoscience”

Persona 3: The Chronic Pain Researcher

Job-to-be-done: Find something that actually works after trying everything else

Constraints: Has been to PT, maybe had injections, possibly considering surgery. Has a longer decision timeline. Does more research. Compares multiple providers

Success metric: Specific explanation of how chiropractic would address their particular situation — not generic “we treat back pain” messaging

Decision criteria: Clinical reasoning. They want to understand WHY this approach, not just WHAT this approach. They’ve been disappointed before and need to understand the mechanism

Vocabulary: “nothing works for my back pain,” “chiropractor for herniated disc,” “alternatives to back surgery,” “failed physical therapy what next”

Persona 4: The Post-Accident Patient

Job-to-be-done: Determine if chiropractic is appropriate for a car accident injury and whether insurance covers it

Constraints: In pain, may have attorney involved, needs documentation for insurance/legal, time-pressured, has never navigated PI claims before

Success metric: Clear answer on coverage, timeline, and what the process looks like

Decision criteria: Specificity about PI cases. They need to know this practice handles their exact situation, not just general wellness

Vocabulary: “whiplash chiropractor,” “car accident chiropractor near me,” “does insurance pay for chiropractic after accident,” “PIP chiropractic coverage”

Each of these personas generates a completely different set of AI prompts. And each gets different AI answers — because AI search is personalized based on the context signals embedded in how someone phrases their question.

If you’re only tracking “best chiropractor near me” and “chiropractic for back pain,” you’re missing three-quarters of the prompt surface.
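If it helps to see the persona card as a data structure, here is a rough sketch. The five fields come from the cards above; the class name `PersonaCard` and the `seed_prompts` helper are hypothetical names I made up for illustration, and using the vocabulary list directly as the starting prompt list is a simplifying assumption.

```python
from dataclasses import dataclass, field


@dataclass
class PersonaCard:
    """One persona card with the five fields used in this piece."""
    name: str
    job_to_be_done: str
    constraints: list[str]
    success_metric: str
    decision_criteria: str
    vocabulary: list[str] = field(default_factory=list)

    def seed_prompts(self) -> list[str]:
        # Assumption: the persona's own phrases double as the first
        # prompts to track, before any real prompt data exists.
        return list(self.vocabulary)


first_timer = PersonaCard(
    name="Acute Pain First-Timer",
    job_to_be_done="Get out of pain fast, decide if chiropractic is even legitimate",
    constraints=["never been before", "skeptical", "in pain right now"],
    success_metric="Clear yes/no on whether to go and what to expect at the first visit",
    decision_criteria="Practical logistics: what happens, does it hurt, how many visits",
    vocabulary=[
        "threw my back out",
        "is a chiropractor a real doctor",
        "what happens at a first chiropractic visit",
        "how much does it cost without insurance",
    ],
)

# Prompt list keyed by persona, ready to hand to whatever tracker you use.
tracking_list = {first_timer.name: first_timer.seed_prompts()}
```

Keeping the prompts attached to the persona that produced them is what makes per-persona tracking possible later.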

The Cold-Start Problem Kevin Identifies — And How Practitioners Solve It

The most actionable insight in Kevin’s piece is about the cold-start problem: you can’t wait to accumulate 6 months of real prompt volume before you start optimizing. Synthetic personas let you simulate prompt behavior immediately.

For SaaS companies, this means building personas from CRM data and support tickets while waiting for prompt tracking tools to accumulate signal.

For a chiropractor, the cold-start solution is even faster — because the data isn’t sitting in a CRM. It’s happening in real time, every day, in the treatment room.

This is where hardware becomes strategy.

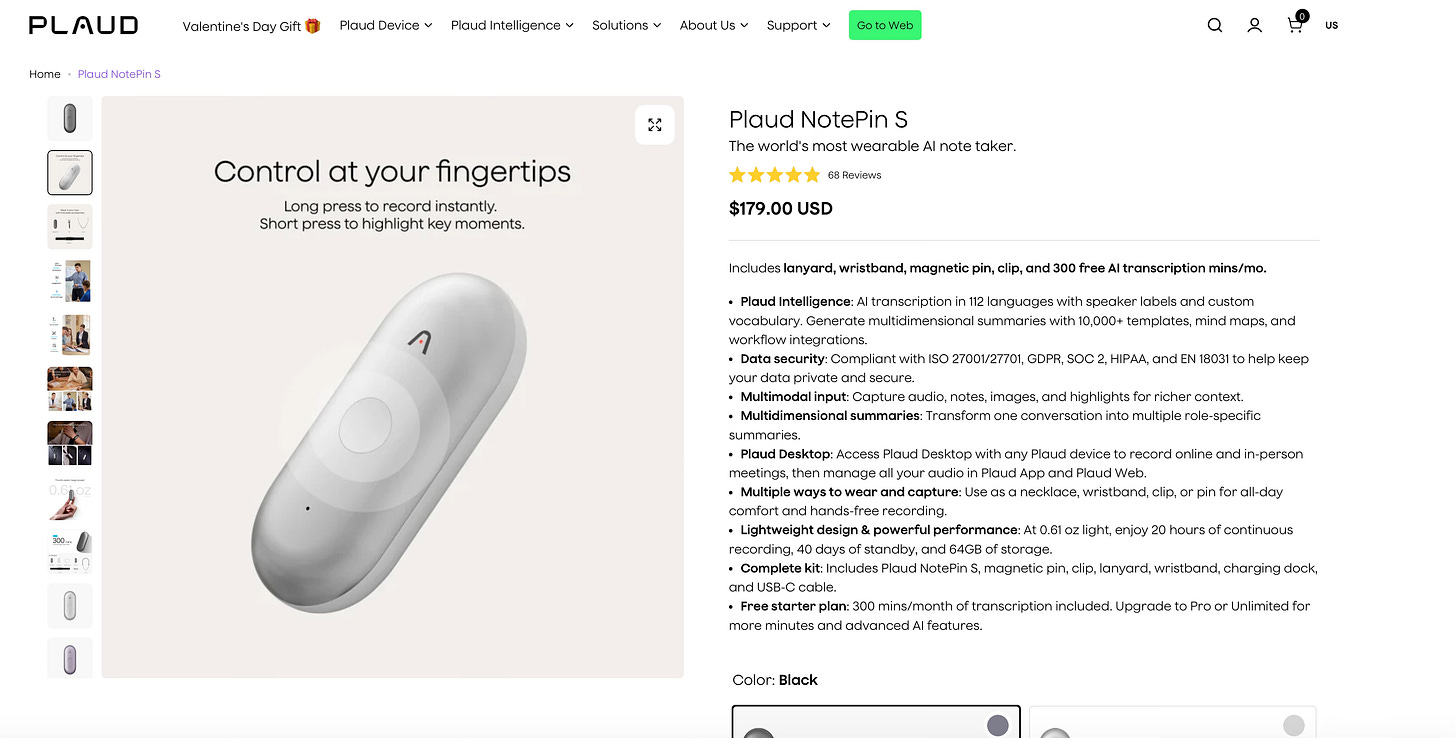

A wearable recording device like the Plaud Note — a pin or card that passively records and auto-transcribes — changes the entire equation. The chiropractor doesn’t have to “do research.” They don’t have to write down what patients ask. They don’t have to remember to log conversations. They just wear the pin and see patients.

After 30 days, at 15-30 conversations per working day, they have roughly 300-600 transcribed patient interactions. Stripped of PHI (names, dates of birth, specific health details), those transcripts become the richest possible input for synthetic persona construction. Every question a patient asked. Every concern they voiced. Every term they used. Every moment of hesitation or confusion.

That’s not CRM data with 3 data points per contact. That’s the full texture of how real people think about, talk about, and make decisions about chiropractic care.
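For a sense of what the stripping step involves, here is a toy sketch that redacts known names and date-like strings from a transcript. It is an illustration only: the `redact` function and its patterns are my assumptions, it does nothing about specific health details, and it is not a HIPAA-compliant de-identification method. A real practice would need a vetted tool and human review.

```python
import re

# Assumption: dates of birth appear in numeric forms like 04/12/1985 or 4-12-85.
DATE_PATTERN = re.compile(r"\b\d{1,2}[/-]\d{1,2}[/-]\d{2,4}\b")


def redact(transcript: str, known_names: list[str]) -> str:
    """Replace date-like strings and known patient names with placeholders.

    Illustrative only. Names must be supplied by the caller (for example
    from the day's schedule); anything not listed is left untouched.
    """
    text = DATE_PATTERN.sub("[DATE]", transcript)
    for name in known_names:
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text, flags=re.IGNORECASE)
    return text


# Made-up sample line, not a real transcript.
sample = "Hi, this is Dana Smith, born 04/12/1985. I threw my back out."
cleaned = redact(sample, ["Dana Smith"])
print(cleaned)
```

The patient's own phrasing ("threw my back out") survives the redaction, which is the part the personas need.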

The front desk phone line is arguably even more valuable. Patients call when they’re in decision mode — they’ve already asked ChatGPT, they’ve already read the Google reviews, and now they’re calling to close the gap between what AI told them and what they still need to know. The questions they ask in that 3-minute call map almost directly to the prompts they typed 10 minutes earlier.

Record and transcribe those calls (with proper consent and HIPAA-compliant tools), and you’re capturing the exact language bridge between AI prompts and booking decisions.

Where This Connects to the Content Flywheel

Here’s what I keep coming back to.

Dave Gerhardt laid out the Brand Flywheel in a recent video conversation with AirOps that maps directly onto this. His core argument: use conversations as your idea-testing engine, then repurpose by going deeper, not wider. Don’t copy-paste the same content across formats. Take one strong conversation and build a better second-draft asset with added research, context, and point of view. And the whole thing only works with systems and measurement protecting the momentum.

He’s talking about B2B marketing teams. But the principle is exactly the same for a chiropractor with a Plaud pin: patient conversations are the raw signal. The flywheel turns that signal into compounding content. The personas tell you which signals to prioritize.

In the video flywheel piece, I laid out how a real estate agent turns one filming session into six structured assets — YouTube video, blog post, FAQ schema, email capture, cross-links, video chain. The content flywheel solves the production problem. Gerhardt’s framework solves the distribution problem — fewer channels, more commitment, ruthless measurement of what actually resonates.

Synthetic personas solve the targeting problem.

Without personas, a chiropractor filming videos is guessing which topics matter most and which language to use. “I’ll do a video on sciatica” is a start, but it skips the harder part: whose sciatica question you’re answering and how they’d phrase it.

With personas built from actual patient recordings, the content calendar writes itself:

The Acute Pain First-Timer generates videos like: “What happens at your first chiropractic visit — everything you need to know before you go.” Filmed in the actual clinic, walking through the actual process, using the actual words patients use when they’re nervous about their first visit.

The Referred Skeptic generates: “Is chiropractic evidence-based? Here’s what the research actually says — and where it’s still uncertain.” The honest assessment content that, per the chiro study, nobody in the field has the courage to publish.

The Chronic Pain Researcher generates: “I’ve tried everything for my back pain. When I’d recommend chiropractic — and when I wouldn’t.” Clinical reasoning content that bridges the three-layer trust hierarchy from the study.

The Post-Accident Patient generates: “Car accident and considering a chiropractor? Here’s exactly how PIP coverage works and what to expect.”

Each video runs through the same pipeline — transcript to blog post, FAQ schema, condition page embed, YouTube description with structured data. But now each piece of content is targeted at a specific persona, using vocabulary extracted from actual patient conversations, answering the exact questions that persona asks AI models.
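The FAQ schema step in that pipeline is the easiest one to show. Below is a small sketch that turns question-and-answer pairs into schema.org FAQPage JSON-LD. The `faq_jsonld` helper is a name I made up, and the answer text is a placeholder the practice would replace with its own wording.

```python
import json


def faq_jsonld(qa_pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


# The question uses persona vocabulary; the answer is a placeholder.
pairs = [
    (
        "What happens at a first chiropractic visit?",
        "Placeholder: replace with the practice's own answer.",
    ),
]

faq_data = faq_jsonld(pairs)
# Serialized form, to be embedded in a <script type="application/ld+json"> tag.
markup = json.dumps(faq_data, indent=2)
print(markup)
```

Writing the questions in the persona's vocabulary, rather than clinical terms, is the point of feeding this step from the transcripts.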

And then you track citation performance per persona. Not “are we showing up for chiropractic queries” but “are we showing up when the Referred Skeptic asks whether chiropractic is evidence-based? Are we showing up when the Post-Accident Patient asks about PIP coverage?”

That’s a measurable feedback loop. You can see which personas you’re winning and which ones you’re invisible to. You can prioritize content for the gaps. You can measure lift after publishing.
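As a sketch of what that loop computes, here is one way to get a citation rate per persona from tracked prompt results. Everything in it is hypothetical: the `citation_rate_by_persona` function, the row format, the domains, and the sample results are invented for illustration and are not data from the study or output from any particular tracking tool.

```python
from collections import defaultdict


def citation_rate_by_persona(results, domain):
    """Share of tracked prompts, per persona, where `domain` was cited."""
    checked = defaultdict(int)
    cited = defaultdict(int)
    for row in results:
        checked[row["persona"]] += 1
        if domain in row["cited_domains"]:
            cited[row["persona"]] += 1
    return {persona: cited[persona] / checked[persona] for persona in checked}


# Hypothetical tracking rows: one per prompt per AI answer checked.
results = [
    {"persona": "Referred Skeptic", "prompt": "is chiropractic evidence-based",
     "cited_domains": ["example-clinic.com", "othersite.example"]},
    {"persona": "Referred Skeptic", "prompt": "chiropractor vs physical therapist",
     "cited_domains": []},
    {"persona": "Post-Accident Patient", "prompt": "PIP chiropractic coverage",
     "cited_domains": ["othersite.example"]},
]

rates = citation_rate_by_persona(results, "example-clinic.com")
print(rates)
```

In this made-up sample the practice is cited for half of the Referred Skeptic prompts and none of the Post-Accident Patient prompts, so the post-accident content is where the gap is. Rerunning the same calculation after publishing gives the lift.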

The Compounding Loop

Here’s the thing that makes this more than a content strategy. The recordings don’t stop after 30 days. New patients keep coming. New questions keep surfacing. The personas keep refining.

Month 1: Record conversations, build initial personas, generate first prompt list, identify content gaps.

Month 3: New patient conversations reveal questions the initial personas missed. The Chronic Pain Researcher is asking about “dry needling vs. chiropractic” — a prompt cluster you weren’t tracking. Refine the persona. Film a video. Track the citation lift.

Month 6: You notice that post-accident patients are increasingly mentioning Perplexity by name — “I asked Perplexity and it said to see a chiropractor within 72 hours.” That’s a signal that AI-sourced patients are arriving with pre-formed expectations you need to match or correct. Update the persona card. Adjust the content.

This is Kevin’s “regeneration triggers” concept applied to a clinical setting. The personas aren’t static documentation. They’re living models that evolve as patient conversations reveal new patterns.

And every conversation simultaneously feeds three systems: persona refinement, content creation, and measurement. The chiropractor doesn’t have to think about AEO strategy. They just see patients. The system extracts the strategy from the conversations.

What This Actually Looks Like Operationally

I want to be concrete about what a practice would actually need:

Hardware: A Plaud Note pin ($170-250) for the chiropractor, plus call transcription on the front desk line (OpenPhone, or whatever the practice management system supports). Total cost: under $300.

Processing: De-identify transcripts (strip names, DOBs, specific health details). Run through persona card construction using the 5-field framework. Cross-reference patient vocabulary against known AI prompt patterns. This is the service layer — where the expertise lives.

Output: 4-6 synthetic personas with prompt lists per persona. Content calendar mapped to persona gaps. Citation tracking per persona per AI model. Monthly refinement cycle.

What the chiropractor does differently: Wears a pin. That’s it. Maybe reviews a monthly report showing which patient personas they’re visible to in AI search and which ones they’re not. Everything else — the persona construction, the prompt tracking, the content targeting, the measurement — happens behind the scenes.

The barrier to entry isn’t budget. It’s not technical. It’s the same barrier I identified in the original chiro study and the video flywheel piece: the willingness to be recorded, to be transparent, to let your actual clinical conversations become the foundation of your content strategy.

Which, when you think about it, is just another version of professional courage.

Kevin’s Warning Applies Here Too

One thing Kevin gets right that I want to echo: synthetic personas are a filter tool, not a decision tool. They narrow your option set. They simulate prompt behavior. They do not replace actual patient feedback.

The sycophancy bias he flags is real: AI-generated personas are overly positive and overly rational. A synthetic persona will say “I would research three chiropractors before booking.” A real patient calls the first one that answers the phone while they’re lying on the floor. The friction and irrationality of real human behavior are things synthetic personas underweight.

But for prompt tracking — which is what we’re actually using them for — that limitation matters less. You don’t need the persona to perfectly replicate a patient’s entire decision journey. You need it to generate the prompts that patient would type into ChatGPT. And for that, Kevin’s Stanford data says 85% accuracy is achievable.

85% accuracy, from a $200 pin and 30 days of normal patient interactions, targeting a vertical where zero of 31 audited sites implement FAQ schema and Domain Rating correlates only weakly with citations (r = 0.197).

The math is hard to argue with.

This piece builds on the chiropractic AEO study (62 queries, 2,293 citations, 1,041 practice domains) and the video content flywheel analysis. The synthetic persona framework is adapted from Kevin Indig’s Growth Memo on synthetic personas, which I’d recommend reading for the full SaaS application and the production-ready script.

If you run a practice and want to talk about what this system looks like for your vertical, I’m reachable here.