Question Mining Works Differently When the Stakes Are Somebody’s Spine

I tested it in chiropractic, where "Fear" doesn't mean wasted budget; it means potential paralysis. Here's what changes, what stays, and why the moat is shifting.

Josh Grant just published The Definitive Guide to Question Mining, and it’s the most complete operating system for AEO I’ve read. The Answer Ownership System, the four intent buckets (Evaluation, Fear, Outcome, Process), the emphasis on uncertainty over volume, the Reddit mining methodology, the sales call → canonical answer pipeline. All of it is sharp.

But his examples are SaaS. Enterprise IT buyers. Headless CMS evaluations. Pricing objections on sales calls. G2 reviews where “regret” means “this tool was more complex than I expected.”

I’ve spent the last month running a study that applies AEO frameworks to chiropractic care — 98 queries across three AI models, 25 practices, structural audits, correlation matrices, the works. And when I tried to fit the question mining framework onto healthcare, three things happened: two of his core principles validated perfectly, one required a fundamental adaptation, and a new insight about the future of AEO moats fell out.

What Validates Perfectly

“Questions expose uncertainty. Uncertainty is where decisions happen.”

This is the single most important sentence in Grant’s piece, and the chiro data confirms it at a level that surprised even me.

In the study, I built a 178-question patient corpus from Reddit, PAA boxes, Perplexity suggestions, and chiropractic podcast Q&As. These aren’t keyword tool outputs. They’re the actual questions real patients ask, in real language, when they’re anxious and trying to decide whether to book an appointment.

When I clustered those 178 questions by intent, the distribution wasn’t even close to what I expected. The largest single category — bigger than Evaluation, bigger than Outcome, bigger than Process — was Fear.

58 questions. 33% of the entire corpus.

“Is chiropractic safe during pregnancy?” “Is it normal to feel worse after an adjustment?” “Is my chiropractor scamming me?” “What’s the real risk of stroke from a neck adjustment?”

Grant would call these “quiet fear questions” — the ones that “often surface the real blocker.” In healthcare, there’s nothing quiet about them. But the principle is identical: the questions that matter most for decision-making are the ones that express uncertainty, not curiosity.

And the competitive landscape confirmed Grant’s prediction perfectly: when you identify the uncertainty that shapes decisions, you almost always find that nobody is answering it clearly.

Zero of 25 practices in the study have dedicated Fear content. Nobody addresses stroke risk honestly. Nobody explains whether a three-times-a-week treatment plan is normal or a billing tactic. Nobody has a page titled “Is chiropractic pseudoscience? Here’s what the evidence actually says.”

33% of patient questions. Zero supply.

Grant calls this “the gap between what buyers thought they were buying and what they’re actually experiencing.” In healthcare, it’s the gap between what patients are terrified about and what any practice is willing to address on their website.

“Reviews are just questions asked too late.”

This one hit hard.

Grant writes that reviews are “delayed questions buyers wish they had asked earlier” and that the most valuable review content is regret — “I wish I had known,” “in hindsight,” “if you’re considering this, be aware.”

In the chiro study, I cross-referenced Google review data against AI citation frequency across 25 practices. The finding: review count correlates negatively with AI citations (r = −0.453). But review condition density, meaning the number of specific conditions mentioned per 1,000 words of review text, correlates strongly and positively (r = +0.631).

Reviews aren’t just questions asked too late. They’re information density asked too late. A review that says “Dr. Swanson helped my migraines after three visits” gives the AI model a condition, a practitioner, a timeline, and an outcome. A review that says “Great experience! Highly recommend!” gives the model nothing.

Grant’s insight applies directly: the review content that matters for AEO is the content that resolves specific uncertainty. “Great product!” means nothing. “Works well for X, but only if you do Y” means everything.

The operational implication is immediate. Stop asking patients “How was your experience?” and start asking “What brought you in?” and “What changed?” Those two prompts naturally generate the condition-specific, outcome-specific language that AI models need.

What Needs Adaptation

Grant’s four intent buckets — Evaluation, Fear, Outcome, Process — work for SaaS. They don’t fully work for healthcare.

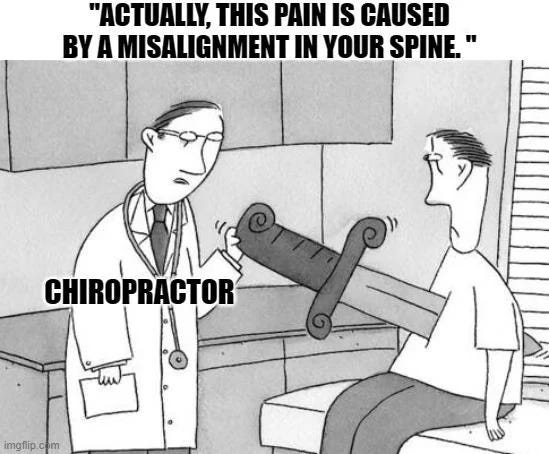

The problem is Fear.

In SaaS, Fear means: “Will this product waste my budget?” “Will this tool break my workflow?” “Am I going to look stupid for recommending this internally?”

In healthcare, Fear means: “Will this person paralyze me?”

That’s not a difference in degree. That’s a difference in kind. The consequence scale is categorically different. SaaS Fear is financial and reputational. Healthcare Fear is physical and existential.

When I tried to force the 178-question patient corpus into four buckets, Fear kept splitting into two distinct sub-categories:

Safety — physical harm concerns. Stroke risk. Nerve damage. Whether treatment will make things worse. Whether it’s safe during pregnancy. The questions where the patient is worried about their body.

Trust — manipulation concerns. Whether the treatment plan is a scam. Whether the practitioner is evidence-based or pushing pseudoscience. Whether X-rays were necessary or a billing tactic. The questions where the patient is worried about being exploited.

These feel similar on the surface. They’re operationally different. A page that addresses stroke risk with named studies and honest statistics satisfies Safety but doesn’t touch Trust. A page that explains how to evaluate whether a treatment plan is legitimate addresses Trust but doesn’t touch Safety.

And the content formats differ. Safety content needs research citations, specific risk statistics, and clear referral criteria (“if you have X symptoms, go to the ER, not a chiropractor”). Trust content needs decision frameworks, red-flag checklists, and evaluation criteria (“here are five things to look for in an evidence-based practitioner”).

Grant’s framework needs a fifth bucket for healthcare. I’d propose: Evaluation, Safety, Trust, Outcome, Process. Or keep it at four and split Fear into Safety and Trust as sub-categories. Either way, the distinction matters because the content strategy for each is completely different, and the single largest share of patient questions (33% of the corpus) sits in these two sub-categories, which the current framework treats as one.
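One way to make the Safety/Trust split operational is a page-audit checklist. The Python sketch below encodes the content formats described for each sub-category; the element names are hypothetical labels, not part of Grant's framework. It shows that a stroke-risk page can fully satisfy Safety while leaving Trust untouched.

```python
# Content elements each Fear sub-bucket needs, taken from the formats described above.
# The element names are hypothetical labels for a page-audit checklist.
FEAR_SUBBUCKETS: dict[str, set[str]] = {
    "Safety": {"research_citations", "risk_statistics", "referral_criteria"},
    "Trust": {"decision_framework", "red_flag_checklist", "evaluation_criteria"},
}


def coverage_gaps(page_elements: set[str]) -> dict[str, set[str]]:
    """For each sub-bucket, the required elements this page is still missing."""
    return {bucket: required - page_elements for bucket, required in FEAR_SUBBUCKETS.items()}


def satisfies(page_elements: set[str], bucket: str) -> bool:
    """True when the page contains every element the sub-bucket requires."""
    return not coverage_gaps(page_elements)[bucket]


# A stroke-risk page with named studies, honest statistics, and ER referral criteria
stroke_page = {"research_citations", "risk_statistics", "referral_criteria"}
print(satisfies(stroke_page, "Safety"))  # True
print(satisfies(stroke_page, "Trust"))   # False
```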

This isn’t a critique of the framework. It’s evidence that the framework generalizes with vertical-specific adaptation — which is exactly the kind of finding that makes it more useful for anyone applying question mining outside SaaS.

The Question Mining Sources Are Different Too

Grant identifies six primary question mining sources: Reddit, sales calls (Gong), customer support tickets, social media (X, LinkedIn), reviews (G2), and internal search/chat.

For a chiropractor, three of those don’t exist. There are no Gong-recorded sales calls. There’s no G2 profile. There’s no enterprise chat tool with typed queries.

But the sources that do exist are arguably richer.

Patient conversations replace sales calls — and they’re better. A SaaS sales call has one qualified buyer asking questions for 30-60 minutes. A chiropractor has 15-30 patient conversations per day, each with a person in active decision-making mode about their health. Not browsing. Not comparing features in a spreadsheet. Sitting in a room, in pain, asking real questions.

In the synthetic personas piece, I laid out how a wearable recording device like the Plaud Note captures these conversations passively. After 30 days, a practice has 400-600 transcribed patient interactions. Every question asked. Every concern voiced. Every term used. Every moment of hesitation.

That’s not CRM data with 3 fields per contact. That’s the full texture of how real people think about, talk about, and make decisions about chiropractic care.
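Turning those transcripts into a question corpus can start very simply. The Python sketch below assumes transcripts are exported as plain, punctuated text (an assumption about the export, not a documented Plaud format): it pulls out sentences ending in a question mark and counts how often each recurs across conversations.

```python
import re
from collections import Counter


def extract_questions(transcript: str) -> list[str]:
    """Return the sentences in a transcript that end with a question mark."""
    # Assumes punctuated text; trailing words without end punctuation are dropped.
    sentences = re.findall(r"[^.?!]+[.?!]", transcript)
    return [s.strip() for s in sentences if s.strip().endswith("?")]


def question_counts(transcripts: list[str]) -> Counter:
    """Count recurring questions across transcripts (case and spacing normalized)."""
    counts: Counter = Counter()
    for transcript in transcripts:
        for question in extract_questions(transcript):
            counts[" ".join(question.lower().split())] += 1
    return counts


day_one = "My lower back has been bad all week. Is it normal to feel worse after an adjustment? Okay."
day_two = "is it normal to feel worse after an adjustment?"
print(question_counts([day_one, day_two]).most_common(1))
# [('is it normal to feel worse after an adjustment?', 2)]
```

Exact-match counting only catches verbatim repeats; grouping differently worded versions of the same concern is the job of the Normalize step.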

Front desk phone calls replace chat tools — and they’re higher intent. Patients call when they’ve already asked ChatGPT, already read the Google reviews, and now they’re calling to close the gap between what AI told them and what they still need to know. The questions they ask in that 3-minute call map almost directly to the prompts they typed 10 minutes earlier.

Google reviews replace G2 — but the mining methodology is identical. Grant writes about looking for “but” statements, 3-4 star reviews, fit/non-fit language, and regret signals. That methodology transfers directly. A Google review that says “Great results, but I wasn’t prepared for how many visits the treatment plan called for” is structurally identical to a G2 review that says “Powerful tool, but the learning curve is steeper than expected.”
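The review-mining heuristics translate into a small filter. This Python sketch flags three of the signals named above (3-4 star ratings, "but" statements, regret phrases); the cue list is a hypothetical starting point built from the quoted phrases, and fit/non-fit language is not covered.

```python
import re
from dataclasses import dataclass

# Regret phrases quoted in Grant's guide plus one from the review example above;
# a real cue list would be longer.
REGRET_CUES = ("i wish i had known", "in hindsight", "be aware", "wasn't prepared")


@dataclass
class Review:
    stars: int
    text: str


def mining_signals(review: Review) -> list[str]:
    """Which mining signals (mid rating, 'but' statement, regret) a review shows."""
    text = review.text.lower().replace("\u2019", "'")  # normalize curly apostrophes
    signals = []
    if review.stars in (3, 4):
        signals.append("mid_rating")
    if re.search(r"\bbut\b", text):
        signals.append("but_statement")
    if any(cue in text for cue in REGRET_CUES):
        signals.append("regret")
    return signals


reviews = [
    Review(4, "Great results, but I wasn\u2019t prepared for how many visits the treatment plan called for."),
    Review(5, "Great experience! Highly recommend!"),
]
worth_mining = [r for r in reviews if mining_signals(r)]  # keeps only the first review
```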

Reddit is a dead channel for local chiropractic. I scraped 9,323 Reddit rows. Zero mentions of any Bee Cave practice. And the broader chiropractic subreddits are actively hostile — anti-chiro sentiment is pervasive. In the study, Gemini (which ingests Reddit heavily) routes patients AWAY from chiropractors on open-ended health queries. Reddit is where chiropractic goes to get attacked, not recommended.

This is a significant departure from Grant’s framework, where Reddit is “one of the highest-signal sources for AEO-grade question mining.” For healthcare practitioners, Reddit is a minefield. The signal is real — patient concerns and fears are expressed openly — but the environment is adversarial. You mine it for language, not for engagement.

Grant’s “Canonical Answer” Applied to Healthcare

The strongest operational concept in Grant’s piece is the canonical answer — “the clearest explanation you have for a recurring uncertainty” that becomes the reference point across every surface.

In SaaS, canonical answers live on comparison pages, FAQ hubs, and AEO-optimized blog posts. One answer, many surfaces.

In chiropractic, the canonical answer framework maps onto something I’ve been calling the Publishability Hypothesis template. It looks like this for each condition a practice treats:

What is the condition (patient language, not clinical jargon)

What the research says (named studies, not “research shows”)

How we approach it (clinical reasoning, decision frameworks)

Typical timeline and reassessment criteria (“if no improvement by week 4, we do X”)

When to see someone else (referral pathways — the trust signal nobody builds)

Questions to ask any chiropractor about this condition

FAQ section with schema markup
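The template is regular enough to model as a data structure. The Python sketch below is one possible encoding (the field names are hypothetical); it reports which sections a draft page is still missing and emits schema.org `FAQPage` JSON-LD for the FAQ section, which would normally be placed inside a `<script type="application/ld+json">` tag.

```python
import json
from dataclasses import dataclass, field, fields


@dataclass
class ConditionPage:
    """One condition page following the template sections (hypothetical field names)."""
    condition: str
    what_it_is: str = ""
    named_studies: list[str] = field(default_factory=list)
    our_approach: str = ""
    timeline_and_reassessment: str = ""
    referral_criteria: str = ""
    questions_to_ask: list[str] = field(default_factory=list)
    faqs: list[tuple[str, str]] = field(default_factory=list)  # (question, answer)

    def missing_sections(self) -> list[str]:
        """Template sections that are still empty."""
        return [f.name for f in fields(self) if f.name != "condition" and not getattr(self, f.name)]

    def faq_jsonld(self) -> str:
        """schema.org FAQPage markup for the page's FAQ section."""
        data = {
            "@context": "https://schema.org",
            "@type": "FAQPage",
            "mainEntity": [
                {"@type": "Question", "name": q,
                 "acceptedAnswer": {"@type": "Answer", "text": a}}
                for q, a in self.faqs
            ],
        }
        return json.dumps(data, indent=2, ensure_ascii=False)


page = ConditionPage(
    condition="Sciatica",
    faqs=[("How many visits does sciatica usually take?", "(answer written by the practitioner)")],
)
print(page.missing_sections())
print(page.faq_jsonld())
```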

That’s a canonical answer for a condition. It resolves Evaluation uncertainty (“is this practice right for my problem?”), Outcome uncertainty (“will this work?”), Process uncertainty (“what does this look like?”), and Safety/Trust uncertainty (“when do they admit their limits?”).

One page, four intent buckets addressed. One canonical answer, dozens of patient questions absorbed.

Grant would call this “creating a small number of answers so good they absorb dozens of questions.” That’s exactly what it is. And in the chiro study, the structural fingerprinting data confirmed that pages built this way — with high information gain, clinical reasoning, named citations, and decision frameworks — are dramatically more likely to be cited across all three AI models.

The Real Question: What Happens After Everyone Figures This Out?

Here’s where I want to push beyond both Grant’s framework and my own Phase 1 study.

Grant writes: “Ranking is no longer the moat. Clarity is.”

He’s right. Right now.

But clarity — like structure — eventually gets commoditized. When enough practices implement the Publishability template, when enough local businesses deploy FAQ schema, when enough agencies start building canonical answers using the question mining framework, clarity becomes table stakes.

Then what?

I think the moat evolves in three phases.

Phase 1 (now — 2026): Structure is the moat.

Nobody has it. Zero of 31 practice sites in the niche study have FAQ schema. Zero of 6 Tier 1 local practices have LocalBusiness schema. The structural audit scores range from 10 to 22 out of 35 — everyone is mediocre, nobody has invested. First movers win by implementing what Grant calls “the answer engine” — schema, FAQ blocks, clinical reasoning, template consistency, canonical answers distributed across surfaces.

This is the window. The competition for structured signals in healthcare is literally zero.
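Checking whether a competitor has the structured signals is a quick audit. The Python sketch below uses the standard library's `HTMLParser` to collect JSON-LD blocks from a page's raw HTML and checks for `FAQPage` and `LocalBusiness`. It is a sketch under two stated limits: it only sees markup present in the served HTML (not markup injected by JavaScript), and it matches type names exactly, so `LocalBusiness` subtypes such as `MedicalBusiness` are not counted.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collects the raw contents of <script type="application/ld+json"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[str] = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data


def schema_types(html: str) -> set[str]:
    """Every @type declared anywhere in the page's JSON-LD (including nested nodes)."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    found: set[str] = set()

    def walk(node):
        if isinstance(node, dict):
            t = node.get("@type")
            if isinstance(t, str):
                found.add(t)
            elif isinstance(t, list):
                found.update(x for x in t if isinstance(x, str))
            for value in node.values():
                walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    for block in collector.blocks:
        try:
            walk(json.loads(block))
        except json.JSONDecodeError:
            pass  # malformed markup is treated as absent
    return found


def audit(html: str) -> dict[str, bool]:
    types = schema_types(html)
    return {"FAQPage": "FAQPage" in types, "LocalBusiness": "LocalBusiness" in types}
```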

Phase 2 (~2027-2028): Structure becomes table stakes. Velocity matters.

Once the early adopters prove the model works and the fast followers start copying structural patterns, content velocity becomes the differentiator. Being able to deploy the Publishability template across 50 condition pages while a competitor is hand-crafting 3 is a real advantage. AI-assisted content production makes that velocity achievable. Grant’s “clustering → content → refresh” flywheel becomes the execution engine.

But velocity has a ceiling, and it’s the same ceiling that killed the old SEO content farm model: once everyone can produce structured content fast, the models stop rewarding volume and start rewarding something else.

Phase 3 (~2028+): Information provenance is the moat.

This is where it gets interesting.

Right now, AI models can’t easily distinguish between a chiropractor who wrote a sciatica page from personal clinical experience treating 500 sciatica patients, and a content agency that assembled the same page from PubMed abstracts and Healthline articles. Both pages can have named studies, decision frameworks, FAQ schema, comparison tables. Structurally identical. The models reward both equally because they can’t tell the difference.

But that’s a temporary blindness. Models are getting better at detecting synthetic content, and more importantly, they’re getting better at identifying unique information — claims, data points, clinical observations that don’t appear anywhere else in the training corpus.

When a chiropractor writes “in our practice, 73% of patients with acute lumbar disc herniations report >50% pain reduction within 3 weeks using our corrective protocol” — that’s a data point that cannot be synthesized from existing sources. It has to come from somewhere real.

That’s the moat after structure gets commoditized: proprietary clinical data embedded in structured content.

The practices that systematically track their own outcomes — which conditions they treat, what protocols they use, what timelines they observe, what percentage of patients improve by what measure — and publish that data in structurally engineered content will have something no content agency can fabricate and no competitor can copy.
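A statistic like the one above needs only a consistent outcome log. The Python sketch below assumes a hypothetical schema with one reassessment record per patient and a numeric pain score at intake and at reassessment; it computes the share of a condition's patients with more than a 50% reduction within 3 weeks. The records are toy values, not clinical data.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """One patient's reassessment record (hypothetical schema, one record per patient)."""
    condition: str
    intake_pain: float        # pain score at intake, e.g. on a 0-10 numeric scale
    reassessment_pain: float
    weeks: float              # weeks from intake to this reassessment


def pct_reduction(o: Outcome) -> float:
    return (o.intake_pain - o.reassessment_pain) / o.intake_pain * 100


def share_improved(outcomes, condition, min_reduction=50.0, within_weeks=3.0):
    """Percent of a condition's patients with > min_reduction % pain reduction by within_weeks."""
    cohort = [o for o in outcomes if o.condition == condition and o.intake_pain > 0]
    if not cohort:
        return None
    improved = [o for o in cohort if o.weeks <= within_weeks and pct_reduction(o) > min_reduction]
    return len(improved) / len(cohort) * 100


# Toy records, not clinical data
log = [
    Outcome("sciatica", 8, 3, 3),   # 62.5% reduction at week 3
    Outcome("sciatica", 6, 4, 2),   # 33.3% reduction at week 2
    Outcome("sciatica", 10, 4, 5),  # 60% reduction, but only by week 5
]
share = share_improved(log, "sciatica")  # one of three patients qualifies
```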

It’s Grant’s information gain principle pushed to its logical conclusion: the highest possible information gain is data that literally doesn’t exist anywhere else.

How This Gets Built (Starting Today, Not in 2028)

The Publishability Hypothesis template from my study has a section called “How We Approach [Condition].” Right now, that section gets written from the practitioner’s clinical knowledge and philosophy. Useful, but replicable. Any practice with a similar philosophy could write something similar.

But imagine if that section said: “Across 127 patients we’ve treated for sciatica in the past 18 months, our average time to 50% improvement was 3.2 weeks using our corrective care protocol. Patients who completed the full protocol saw results approximately 37% faster than those who pursued relief care only.”
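Both numbers in that sentence come from the same kind of log. The Python sketch below assumes each record stores a protocol label and the weeks until the patient first reported at least 50% improvement (`None` if they never did). It reads "X% faster" as the relative reduction in mean time versus the slower group, which is one reasonable definition, not necessarily the one a practice would choose. The data is toy data.

```python
from statistics import mean
from typing import Optional

# (protocol, weeks until first >= 50% improvement, or None if never reached)
Record = tuple[str, Optional[float]]


def mean_weeks_to_half(records: list[Record], protocol: str) -> Optional[float]:
    """Mean time to 50% improvement among patients on a protocol who reached it."""
    times = [weeks for p, weeks in records if p == protocol and weeks is not None]
    return mean(times) if times else None


def percent_faster(faster: float, slower: float) -> float:
    """How much shorter the faster group's mean time is, relative to the slower group."""
    return (slower - faster) / slower * 100


# Toy data, not clinical results
records: list[Record] = [
    ("corrective", 3.0), ("corrective", 2.0),
    ("relief", 4.0), ("relief", 6.0), ("relief", None),
]
corrective = mean_weeks_to_half(records, "corrective")
relief = mean_weeks_to_half(records, "relief")
print(corrective, relief, percent_faster(corrective, relief))  # 2.5 5.0 50.0
```

Patients who never reach 50% improvement are excluded from the mean here. A plain comparison like this also doesn't account for which patients chose each protocol.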

That’s uncopyable. No amount of content velocity from a competitor can produce that sentence unless they have their own 127 patients and their own outcome tracking.

And here’s the operational connection: the same Plaud workflow I described in the synthetic personas piece is the mechanism that makes this buildable. Patient recordings don’t just capture vocabulary for persona construction. They capture outcome patterns, clinical observations, and treatment timelines that become unique data points. “73% of our acute back pain patients report significant improvement within 3 weeks” — that statistic comes from systematically listening to and tracking what happens in the treatment room.

The practice that starts recording and tracking today isn’t just building content for 2026. It’s accumulating the proprietary dataset that becomes the Phase 3 moat. Every month the system runs, the data gets richer, the benchmarks get more precise, and the content gets harder to replicate. Every month it doesn’t, conversations disappear into the air instead of becoming content assets.

There’s a secondary moat that compounds with this: entity trust accumulation. The practices that have been consistently cited across models for 2-3 years build a compounding signal. Models develop contextual memory of reliable sources. Being cited today makes you more likely to be cited tomorrow, which makes you more likely to be cited next year. It’s the Matthew Effect applied to AI citations — the rich get richer, but only if the foundation was built early.

Grant’s Framework Applied to a Real Healthcare Market

Let me make this concrete with the data from the study.

Grant’s Answer Ownership System has six steps: Capture → Normalize → Qualify → Answer → Distribute → Refresh.

Here’s what that looks like for chiropractic:

Capture: The 178-question patient corpus IS the capture step — pulled from Reddit, PAA boxes, Perplexity suggestions, and podcast Q&As. The Plaud workflow adds a continuous capture layer from actual patient conversations.

Normalize: 178 raw questions collapsed to 98 non-redundant queries. “Is chiropractic safe,” “can a chiropractor hurt you,” “chiropractic stroke risk,” and “is it dangerous to crack your neck” all normalize to a single Fear/Safety cluster.
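A rough version of this normalization step can be sketched in Python with a cue map. The `CLUSTER_CUES` stems below are hypothetical, and a keyword map is only a stand-in for the semantic clustering (for example, embeddings) a larger pipeline would more likely use. Questions with no cue fall back to their normalized wording, so exact rephrasings still merge.

```python
import re
from collections import defaultdict

# Hypothetical cue map: cluster -> word stems that signal it. First match wins.
CLUSTER_CUES = {
    "fear/safety": ("safe", "hurt", "stroke", "danger"),
    "fear/trust": ("scam", "pseudoscience", "billing"),
}


def cluster_key(question: str) -> str:
    """Map a raw question to a cluster name, or to its normalized wording."""
    text = " ".join(re.findall(r"[a-z']+", question.lower()))
    for cluster, stems in CLUSTER_CUES.items():
        if any(re.search(rf"\b{stem}", text) for stem in stems):
            return cluster
    return text  # no cue: fall back to the normalized wording itself


def normalize(questions: list[str]) -> dict[str, list[str]]:
    """Group raw questions under their cluster keys."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for q in questions:
        clusters[cluster_key(q)].append(q)
    return dict(clusters)


raw = [
    "Is chiropractic safe?",
    "Can a chiropractor hurt you?",
    "Chiropractic stroke risk",
    "Is it dangerous to crack your neck?",
]
print(list(normalize(raw)))  # ['fear/safety']
```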

Qualify: The AEO filter Grant describes — does answering this reduce uncertainty, would it change how a recommendation is framed, can your brand credibly be cited? — maps directly onto the four intent buckets. Fear/Safety questions qualify immediately because they carry the highest decision consequence, the largest corpus share (33%), and zero competitive supply.
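The filter plus prioritization can be expressed as a few lines of Python. In this sketch, Grant's three filter questions become booleans, and qualified clusters are ranked by competitive supply first, then size. The Fear figures (58 questions, 178-question corpus, zero competing pages) come from the study. The other two clusters are placeholders, and the ranking rule is an assumption.

```python
from dataclasses import dataclass


@dataclass
class QuestionCluster:
    name: str
    question_count: int
    reduces_uncertainty: bool     # does answering this reduce uncertainty?
    changes_recommendation: bool  # would it change how a recommendation is framed?
    credibly_citable: bool        # can the practice credibly be cited on it?
    competitor_pages: int         # practices already answering it


def qualifies(c: QuestionCluster) -> bool:
    return c.reduces_uncertainty and c.changes_recommendation and c.credibly_citable


def corpus_share(c: QuestionCluster, corpus_size: int) -> float:
    return c.question_count / corpus_size * 100


def prioritize(clusters: list[QuestionCluster]) -> list[QuestionCluster]:
    """Qualified clusters, least-contested first, then largest first."""
    return sorted((c for c in clusters if qualifies(c)),
                  key=lambda c: (c.competitor_pages, -c.question_count))


fear = QuestionCluster("fear", 58, True, True, True, 0)         # counts from the study
process = QuestionCluster("process", 20, True, True, True, 5)   # placeholder values
trivia = QuestionCluster("trivia", 10, False, False, True, 0)   # placeholder values
print(round(corpus_share(fear, 178)))                         # 33
print([c.name for c in prioritize([process, trivia, fear])])  # ['fear', 'process']
```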

Answer: The Publishability Hypothesis template IS the canonical answer format for healthcare. Clinical reasoning, named studies, decision frameworks, reassessment criteria, referral pathways. One page per condition absorbs dozens of patient questions.

Distribute: The video content flywheel IS the distribution layer. One video → YouTube + blog post + FAQ schema + condition page embed + email capture. Same canonical answer, five surfaces. Plus schema markup that no competitor has attempted.

Refresh: This is where most healthcare practices fail. Content goes up and never gets updated. Grant’s warning about “explanation decay” applies directly. A sciatica page with 2019 research citations loses citation authority to a page with 2025 citations. The answer engine has to stay alive.
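A refresh queue can be driven by citation age. This Python sketch assumes each page's cited study years are already recorded (the URLs and the 5-year threshold are hypothetical choices). It flags pages whose newest citation is older than the threshold, or that cite nothing, with the oldest first.

```python
from datetime import date
from typing import Optional


def stale_pages(pages: dict[str, list[int]], max_age_years: int = 5,
                current_year: Optional[int] = None) -> list[str]:
    """Pages whose newest cited study is older than max_age_years, oldest first."""
    year = current_year if current_year is not None else date.today().year
    stale = [url for url, years in pages.items()
             if not years or year - max(years) > max_age_years]
    # Pages with no citations sort first (treated as year 0)
    return sorted(stale, key=lambda url: max(pages[url], default=0))


# Hypothetical pages and citation years
pages = {
    "/sciatica": [2016, 2019],
    "/neck-pain": [2019, 2025],
    "/headaches": [],
}
print(stale_pages(pages, current_year=2025))  # ['/headaches', '/sciatica']
```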

The full system — capture from patient conversations, normalize through question mining, qualify through the AEO filter, answer through the Publishability template, distribute through the video flywheel, refresh through ongoing outcome tracking — is the complete operational stack for healthcare AEO.

It doesn’t require a growth team. It doesn’t require AirOps or Gong or enterprise tooling. It requires a practitioner with a recording device, a camera, a video-to-blog pipeline, and the willingness to publish honest content about the questions patients are actually asking.

The barrier isn’t budget or technology. It’s the same barrier I keep finding: professional courage. The willingness to answer the questions nobody else will answer, with data nobody else is tracking, in language nobody else is using.

That’s the moat. Today, tomorrow, and in 2028.

This piece builds on the chiropractic AEO study (98 queries × 3 models × 25 practices), the video content flywheel analysis, and the synthetic persona framework. Josh Grant’s Definitive Guide to Question Mining is the framework I’m building on — read it for the full SaaS application and the Answer Ownership System.

Phase 2 of the chiropractic study — testing whether these frameworks actually produce measurable citation lift — is underway. Subscribe to follow the experiment.