I Ran the AEO Playbook on Chiropractic. The Results Broke My Assumptions.

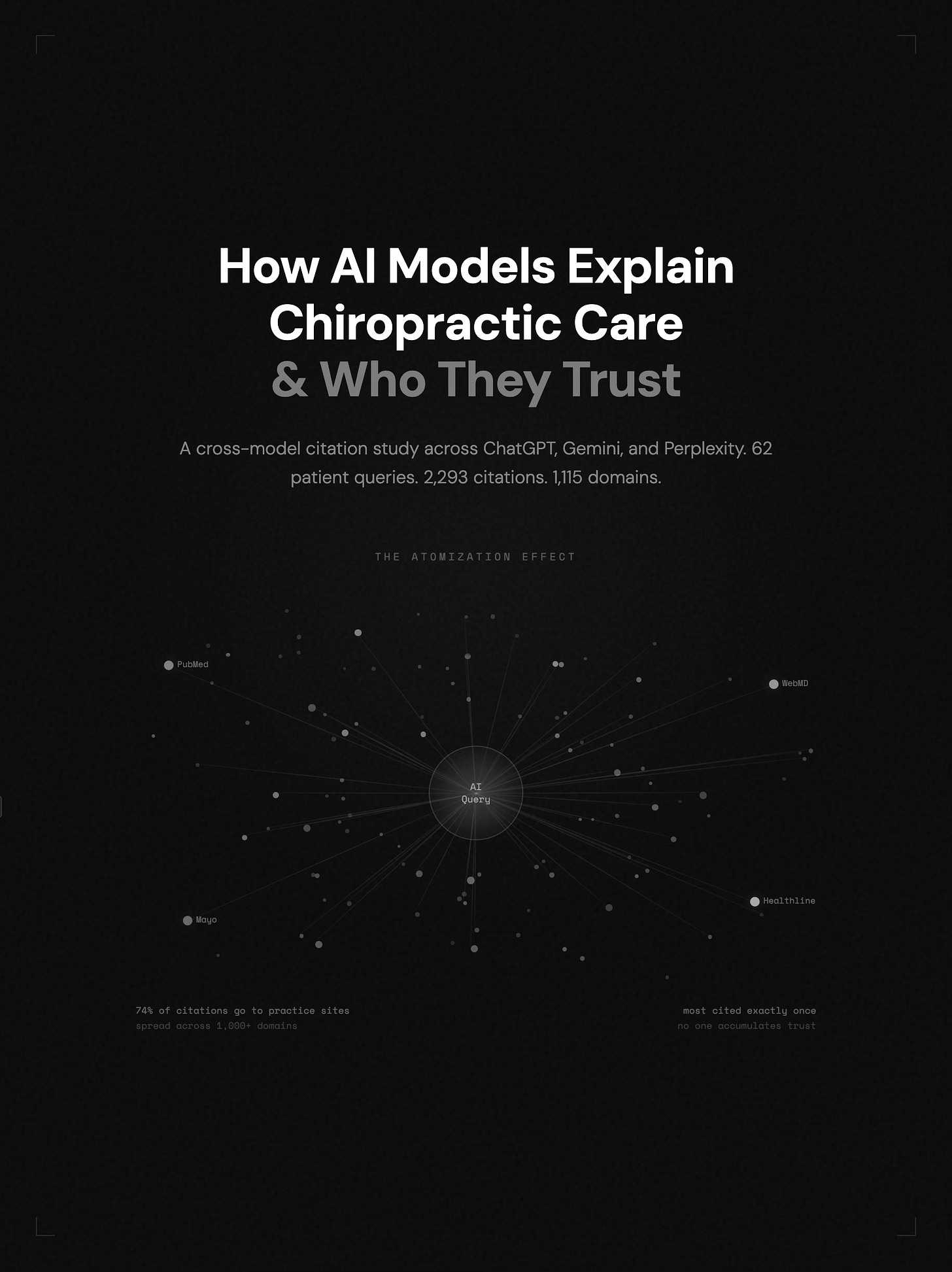

62 queries. 3 AI models. 2,293 citations. What may be the first rigorous AEO study outside of SaaS, and what it means for every local practitioner on the internet.

If you work in SEO or marketing, you’ve probably read Josh Grant’s Definitive 2026 Guide to AEO. If you haven’t, stop here, go read it, and come back. It’s the operating manual for how AI search is replacing traditional discovery.

The core thesis is elegant: Models don’t rank content. They interpret it. The companies winning AI search aren’t the biggest — they’re the most interpretable. Webflow holds 1.2% CMS market share but appears in ~60% of AI-generated answers in their category. Structure beats authority. Interpretability beats volume.

But here’s the question nobody had tested: Does any of this work outside B2B SaaS?

Every AEO case study, every framework, every data point in the playbook comes from the same universe — tech companies with dedicated growth teams, six-figure content budgets, and platforms like AirOps and Profound to operationalize the whole thing.

What about the chiropractor in Charleston with a WordPress site and a $200/month marketing budget? What about the physical therapist, the dentist, the optometrist? The millions of local healthcare practitioners whose patients are right now asking AI models for health guidance before they ever search for a provider?

I decided to find out.

The Study: What I Actually Did

I designed what I think is the first rigorous AEO analysis of a healthcare practitioner vertical. Not “I asked ChatGPT one question” — a (hopefully) replicable study with published data, documented methodology, and falsifiable predictions.

Here’s the scope:

62 queries built from real patient language — pulled from Reddit threads, People Also Ask expansions, chiropractic podcast Q&As, and Perplexity trend data. Organized into 20 thematic clusters covering everything from “Is chiropractic safe during pregnancy?” to “Is my chiropractor scamming me?”

3 AI models — ChatGPT (GPT-4o), Google Gemini, and Perplexity — each query submitted with identical phrasing, every citation logged.

2,293 total citations cataloged across 1,115 unique domains. Every URL classified by asset type: practice site, medical authority, UGC platform, academic, industry association.

Structural fingerprinting of the 20 most-cited practice pages and 11 non-cited practice sites, scored on a content engineering rubric adapted from the AEO Playbook.

Ahrefs authority analysis of 112 domains — Domain Rating, organic traffic, referring domains, backlinks — correlated against citation frequency.
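Classifying each cited URL by asset type is, at its core, a domain lookup. Here is a minimal sketch, assuming hand-maintained domain lists. The seed entries are illustrative, `example-association.org` is a hypothetical placeholder, and the study's actual classification rules are not reproduced here. Unknown domains default to practice sites, which is itself an assumption that would need manual review.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative seed lists only; a real audit needs longer, reviewed lists.
MEDICAL_AUTHORITY = {"mayoclinic.org", "clevelandclinic.org", "webmd.com", "healthline.com"}
UGC_PLATFORMS = {"reddit.com", "youtube.com"}
ACADEMIC = {"pubmed.ncbi.nlm.nih.gov", "jamanetwork.com", "cochranelibrary.com"}
ASSOCIATIONS = {"example-association.org"}  # hypothetical placeholder


def domain_of(url: str) -> str:
    """Lower-cased host name of a URL, without port or a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def classify(url: str) -> str:
    """Map a cited URL to a coarse asset type."""
    domain = domain_of(url)
    for label, domains in (
        ("medical_authority", MEDICAL_AUTHORITY),
        ("ugc_platform", UGC_PLATFORMS),
        ("academic", ACADEMIC),
        ("industry_association", ASSOCIATIONS),
    ):
        if domain in domains:
            return label
    return "practice_site"  # assumption: unknown domains default here


def tally(urls: list[str]) -> Counter:
    """Citation counts per asset type."""
    return Counter(classify(u) for u in urls)


if __name__ == "__main__":
    print(tally([
        "https://www.webmd.com/example",
        "https://www.reddit.com/r/example/",
        "https://example-chiro.com/blog/sciatica",
    ]))
```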

Full study with all data tables and methodology appendix here →

I went in expecting the playbook to mostly validate. What I found was more interesting than validation.

Finding #1: Practice Sites Aren’t Invisible. They’re Atomized.

This was the first surprise.

When I applied the AEO Playbook’s frameworks to healthcare practitioners, I expected its general principle (only the most interpretable sources get cited) to leave practice sites nearly invisible, with medical authorities like WebMD and Mayo Clinic dominating and practice sites appearing rarely, if ever.

That’s not what happened.

Practice sites collectively received 74.2% of all citations — 1,701 out of 2,293. They’re not invisible at all. They’re the majority of what AI models cite for chiropractic queries.

But here’s the catch: those 1,701 citations are scattered across 1,041 different domains.

66.8% of practice domains were cited exactly once

Only 3.7% were cited five or more times

Only 0.7% — seven sites total — were cited ten or more times

Only 51 of all 1,115 domains (4.6%) were cited by all three models

I’m calling this The Practitioner Atomization Effect. Models aren’t ignoring practice sites. They appear to sample almost at random from a commodity pool of 1,000+ interchangeable blogs. Every practice gets a turn. No practice gets authority.

This changes the strategic question entirely. The AEO Playbook frames the problem as “how do we become visible?” For practitioners, the real problem is: how do we escape the commodity pool?
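The atomization figures come from a simple tally over the citation log. The sketch below assumes each logged citation is a `(query_id, model, domain)` row. That layout is hypothetical, and the study's published files may be organized differently. To reproduce the once / 5+ / 10+ shares, filter the rows to practice sites first; the all-three-models figure is taken over all 1,115 domains.

```python
from collections import defaultdict

# One logged citation: (query_id, model, domain). Hypothetical row layout.
Citation = tuple[str, str, str]


def atomization_stats(citations: list[Citation], models: set[str]) -> dict[str, float]:
    """Distribution of citation counts per domain, plus cross-model coverage."""
    counts: dict[str, int] = defaultdict(int)
    models_by_domain: dict[str, set[str]] = defaultdict(set)
    for _query_id, model, domain in citations:
        counts[domain] += 1
        models_by_domain[domain].add(model)

    if not counts:
        raise ValueError("no citations to summarize")
    n = len(counts)
    return {
        "domains": n,
        "share_cited_once": sum(c == 1 for c in counts.values()) / n,
        "share_cited_5_plus": sum(c >= 5 for c in counts.values()) / n,
        "share_cited_10_plus": sum(c >= 10 for c in counts.values()) / n,
        # A domain counts only if every model in `models` cited it at least once.
        "share_cited_by_all_models": sum(ms >= models for ms in models_by_domain.values()) / n,
    }


if __name__ == "__main__":
    toy = [  # hypothetical rows, not study data
        ("q1", "gpt", "a-chiro.com"), ("q2", "gpt", "a-chiro.com"),
        ("q1", "gemini", "a-chiro.com"), ("q1", "perplexity", "a-chiro.com"),
        ("q3", "gpt", "b-chiro.com"),
    ]
    print(atomization_stats(toy, {"gpt", "gemini", "perplexity"}))
```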

Finding #2: Domain Authority Is Irrelevant. Truly, Measurably Irrelevant.

This one should make every SEO professional uncomfortable.

I pulled Ahrefs data for 112 domains in the citation dataset and ran Pearson correlations between traditional authority metrics and AI citation frequency. To control for the medical-authority category, I analyzed practice sites only (n = 77). The results were unambiguous: Domain Rating shows only a weak correlation with citations (r = 0.197), referring domains are similarly weak (r = 0.147), and organic traffic is effectively unrelated (r = 0.077).

For context, a correlation of 0.077 means organic traffic explains less than 1% of the variance in AI citation behavior. Domain Rating — the metric most SEO strategies are built around — explains less than 4%.
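The "variance explained" figures come from squaring r. This small sketch makes that arithmetic and the Pearson formula explicit. `pearson_r` is a plain-Python implementation you could run on per-domain metric values and citation counts (no raw study data is reproduced here), and the loop squares the three reported coefficients. It should print roughly 3.9%, 2.2%, and 0.6%.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)  # undefined if either sample is constant


def variance_explained(r: float) -> float:
    """Share of variance explained by a simple linear fit: r squared."""
    return r * r


# Correlations reported above for practice sites only (n = 77).
reported = {"domain_rating": 0.197, "referring_domains": 0.147, "organic_traffic": 0.077}

for metric, r in reported.items():
    print(f"{metric}: r = {r:.3f}, r^2 = {variance_explained(r):.1%}")
```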

The outliers make the pattern impossible to ignore:

theglenchiro.com — Domain Rating 1. ~285 monthly visitors. 47 referring domains. Cited 7 times across all three models.

painfreecharleston.com — Domain Rating 3. ~151 monthly visitors. Cited 5 times across three models.

Meanwhile, sites with DR 40+ and thousands of referring domains were cited once — or not at all.

Josh Grant’s playbook says “Interpretability > Authority.”

This data doesn’t just support that claim — it quantifies it.

The metrics that built the SEO industry have near-zero predictive value for AI citation (r = 0.077 to 0.197).

Finding #3: The Schema Desert

This might be the most actionable finding in the entire study.

I audited 31 practice sites for schema markup — the structured data that the AEO Playbook identifies as “the blueprint models use to understand you.” FAQ Schema, HowTo Schema, MedicalCondition Schema, Article Schema.

Zero out of 31 sites had any of it.

Not the cited sites. Not the non-cited sites. Nobody. A complete structural vacuum.

The AEO Playbook’s FAQ Schema Accelerator drove +331 new AI citations for Webflow (a 57% increase) on six pages. Among the 31 chiropractic practices audited here, not one had even attempted this play. In this sample, the competition for schema-driven AI visibility is literally zero.
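For a practice starting from zero, the FAQ markup itself is small. The sketch below assumes you generate the markup with Python, though a CMS field or hand-written JSON works just as well. It builds schema.org `FAQPage` JSON-LD from question/answer pairs. `faq_jsonld` is a hypothetical helper name and the Q&A text is placeholder. The answers you mark up should match text that is visible on the page.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    markup = faq_jsonld([
        ("How often do you reassess progress?",
         "Placeholder answer: write your own clinical reasoning here."),
    ])
    # Wrap the JSON in a script tag so it can be pasted into the page.
    print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Paste the printed `<script>` tag into the page, then check it with Google’s Rich Results Test.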

Finding #4: Information Gain Is the Only Thing That Matters

When I fingerprinted cited versus non-cited practice sites using the content engineering rubric, one dimension stood out from all the rest: information gain.

Cited sites scored dramatically higher on information gain (3.75 vs. 1.30 on a 5-point scale), a 2.45-point gap and the largest separation of any dimension. Structured answers, tables and lists, structural consistency, and content depth all showed smaller differences (gaps ranging from 1.35 to 1.90), and none matched the separation on information gain.

In other words, formatting helps — but it doesn’t decide.
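The cited vs. non-cited comparison reduces to a per-dimension gap between group means. Here is a minimal sketch, assuming each audited site has a dict of 0-5 rubric scores and every site is scored on the same dimensions. The dimension names and scores are hypothetical, not the study’s scorecards.

```python
from statistics import mean

# Rubric scores for one site: dimension name -> score on a 0-5 scale.
Scores = dict[str, float]


def dimension_gaps(cited: list[Scores], non_cited: list[Scores]) -> list[tuple[str, float]]:
    """Mean cited score minus mean non-cited score per dimension, largest gap first."""
    dimensions = cited[0].keys()
    gaps = {
        dim: mean(site[dim] for site in cited) - mean(site[dim] for site in non_cited)
        for dim in dimensions
    }
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    cited = [  # hypothetical scorecards, not study data
        {"information_gain": 4, "tables_and_lists": 3},
        {"information_gain": 3.5, "tables_and_lists": 4},
    ]
    non_cited = [
        {"information_gain": 1, "tables_and_lists": 2},
        {"information_gain": 1.5, "tables_and_lists": 2},
    ]
    for dim, gap in dimension_gaps(cited, non_cited):
        print(f"{dim}: {gap:+.2f}")
```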

Information gain asks a simple question: does this page give the model something it couldn’t have generated from its training data alone? On that dimension, cited pages were in a different class entirely.

In the AEO Playbook, Josh describes this as “content that provides clarity, structure, specificity, or decision-useful reasoning beyond the generic summaries models already know.”

In chiropractic, the highest-information-gain pages followed a clear pattern: they published clinical reasoning, not clinical claims.

The Publishability Hypothesis: Reasoning, Not Claims

This is the novel framework that emerged from the data.

Most chiropractic blogs say things like: “Chiropractic care can help with disc herniations.” That’s a clinical claim.

An LLM can generate that sentence from training data. Zero information gain. It’s also regulatory risk, liability exposure, and trivially copyable by competitors. This is what 1,000+ practice sites in the commodity pool all publish.

The sites that escaped the commodity pool did something different. They published clinical reasoning:

“When evaluating a patient with an L4-L5 disc herniation, evidence-based chiropractors consider several factors. A 2010 JMPT systematic review showed moderate evidence for short-term improvement in acute disc herniation with radiculopathy. The ACP 2017 guideline recommends spinal manipulation as first-line for acute LBP. In our practice, we reassess functional progress at 2-week intervals using the Oswestry Disability Index, and if measurable improvement hasn’t occurred by 4-6 weeks, we discuss referral options including PT co-management or orthopedic consultation.”

That version cites specific research, connecting the page to the medical literature models trust. It shows clinical judgment an LLM can’t fabricate. It contains if/then decision logic models can extract and reuse. It names specific outcome measures. It includes referral criteria that signal scope-of-practice awareness. And it carries far less liability than a claim: it describes a process, not a promise.
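To make "if/then decision logic" concrete, here is the reassessment rule from the quoted example expressed as code. This is a structural illustration under stated assumptions, not a clinical protocol. `next_step`, the `Reassessment` record, and both thresholds are hypothetical. `min_improvement` is left for the clinician to set rather than assuming any cut-off, and `decision_week` stands in for the quote’s 4-6 week window.

```python
from dataclasses import dataclass


@dataclass
class Reassessment:
    week: int
    odi_score: float  # Oswestry Disability Index (0-100); lower means less disability


def next_step(baseline_odi: float, visits: list[Reassessment],
              min_improvement: float, decision_week: int) -> str:
    """The quoted rule as if/then logic: reassess every 2 weeks; if there is no
    measurable improvement by the decision point, discuss referral options.

    min_improvement and decision_week are hypothetical, clinician-set parameters.
    """
    if not visits:
        return "reassess at week 2"
    latest = visits[-1]
    if baseline_odi - latest.odi_score >= min_improvement:
        return "measurable improvement: continue and reassess in 2 weeks"
    if latest.week >= decision_week:
        return "no measurable improvement: discuss referral (PT co-management or orthopedic consult)"
    return "no measurable improvement yet: reassess in 2 weeks"
```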

I’m calling this The Publishability Hypothesis: In healthcare practitioner verticals, the content most likely to escape citation atomization publishes clinical reasoning rather than clinical claims. This content is simultaneously the highest in information gain, the most legally defensible, the hardest for competitors to replicate, and the most structurally aligned with what AI models extract and reuse.

The professional courage required to publish this content is the competitive moat.

The Three-Layer Trust Hierarchy

The citation data revealed a consistent trust architecture across all three models:

Layer 1 — The Science: PubMed, JAMA, Cochrane Reviews. Establishes whether chiropractic works for a given condition. Models trust this absolutely but never cite it to patients directly.

Layer 2 — The Translators: Healthline, Mayo Clinic, Cleveland Clinic, WebMD. Turns research into patient-readable guidance. This is where 18.2% of citations concentrate across just 59 domains — high trust, high concentration.

Layer 3 — The Practitioners: 1,041 individual practice blogs. Rewrites what Layer 2 already said, worse. This is where 74.2% of citations scatter across the atomized commodity pool.
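The concentration gap between Layers 2 and 3 can be put in one number: average citations per cited domain. This sketch uses the reported figures. One assumption: the Layer 2 citation count is rebuilt from the rounded 18.2% share, so it is approximate. It works out to about 1.6 citations per practice domain versus about 7.1 per translator domain, roughly 4.3 times the concentration.

```python
TOTAL_CITATIONS = 2293


def per_domain(citations: float, domains: int) -> float:
    """Average citations per cited domain in a layer."""
    return citations / domains


layer3 = per_domain(1701, 1041)  # practice sites: reported counts
# Layer 2 count is reconstructed from the rounded 18.2% share, so it is approximate.
layer2 = per_domain(0.182 * TOTAL_CITATIONS, 59)

print(f"Layer 3 (practices): {layer3:.2f} citations per domain")
print(f"Layer 2 (translators): {layer2:.2f} citations per domain")
print(f"Concentration ratio: {layer2 / layer3:.1f}x")
```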

The gap: Nobody bridges Layer 1 and Layer 3. Healthline bridges Layers 1→2 but isn’t a practitioner. Practice blogs attempt 2→3 but just dilute what Healthline already did better. The first practice that bridges 1→3 directly — citing the research, showing clinical reasoning, structured for AI extraction — occupies a position nobody else holds.

Model Differences: Gemini Is the Skeptic

Not all models treat chiropractic the same:

GPT was the most generous to practice sites — 1,453 citations, 75.2% going to practices, moderate UGC lean (4.4% Reddit/YouTube).

Perplexity leaned hardest on UGC — 618 citations, 7.8% going to Reddit and YouTube (nearly double GPT’s rate), consistent with the playbook’s prediction about earned answer surfaces.

Gemini was the skeptic: only 222 citations total, but 24.3% went to medical authority sites, compared to ~17.5% for the other two. That is roughly 1.4 times the medical-authority share, a heavier weighting toward institutional medical trust. For a profession that already faces legitimacy questions, Gemini’s YMYL filter is the tightest gate. Gemini also returned the most zero-citation answers: 8 of 62.
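The per-model numbers can be cross-checked against each other. This assumes the 18.2% overall medical-authority share and Gemini’s 24.3% are both shares of the same 2,293-citation set. Under that assumption, the implied share for GPT and Perplexity combined is about 17.5%, and Gemini’s share comes out to about 1.4 times that. Every input is a rounded percentage, so treat the ratio as approximate.

```python
# Citation totals per model, as reported above.
per_model = {"gpt": 1453, "perplexity": 618, "gemini": 222}
TOTAL = sum(per_model.values())  # should equal the study-wide 2,293

OVERALL_MEDICAL_SHARE = 0.182  # medical authority share of all citations
GEMINI_MEDICAL_SHARE = 0.243

# Medical-authority citations left over for GPT and Perplexity combined.
others = per_model["gpt"] + per_model["perplexity"]
others_medical = OVERALL_MEDICAL_SHARE * TOTAL - GEMINI_MEDICAL_SHARE * per_model["gemini"]
others_share = others_medical / others
ratio = GEMINI_MEDICAL_SHARE / others_share

print(f"Total citations: {TOTAL}")
print(f"GPT + Perplexity medical-authority share: {others_share:.1%}")
print(f"Gemini relative weighting: {ratio:.2f}x")
```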

Cross-model citation (being cited by all three) is extremely rare. Only 51 domains out of 1,115 achieved it: 4.6%. Being cited by one model means little on its own. Being cited by all three suggests you’ve genuinely escaped the noise.

What the AEO Playbook Got Right (and What Needs Adapting)

Validated:

Interpretability > Authority — confirmed, r = 0.077-0.197

Content engineering predicts citation — cited sites scored 27/40 vs. 15.5/40 for non-cited

Schema is table-stakes — and in chiropractic, the table is completely empty

Earned answers matter — Perplexity leans on Reddit/YouTube exactly as predicted

Needs adaptation for healthcare:

“Invisible” should be “atomized”: my initial hypothesis predicted practice sites would be invisible, but they actually get cited at scale (74.2% of all citations), just scattered across 1,041 domains without concentration.

Brand entity recognition doesn’t exist for individual practices: none of the practices studied has cross-surface entity presence

YMYL creates a trust multiplier: Gemini gives medical authority sites roughly 1.4 times the share the other two models do, so healthcare practices face a higher structural bar than SaaS companies

Novel contributions:

The Publishability Hypothesis — reasoning vs. claims as the information gain framework for healthcare

The Three-Layer Trust Hierarchy — and the Layer 1→3 bridge gap as the strategic opportunity

The Practitioner Atomization Effect — a new mechanism distinct from invisibility

What’s Next: Testing the Hypothesis

Phase 1 proved the patterns exist. Phase 2 will test whether they can be changed.

The plan: take 1-3 chiropractic practice sites currently in the commodity pool. Restructure their condition pages using the reasoning-based framework — evidence citations, decision logic, reassessment criteria, referral pathways. Add FAQ, HowTo, and MedicalCondition schema (entering the Schema Desert with zero competition). Build template consistency across all condition pages.
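For the MedicalCondition markup in Phase 2, here is a minimal sketch. It assumes the condition page is marked up as a schema.org `MedicalWebPage` whose `about` property is a `MedicalCondition`, which is one reasonable structure among several. `condition_jsonld`, the example URL, and the symptom and therapy names are hypothetical placeholders. `signOrSymptom` and `possibleTreatment` are real schema.org properties, but which properties to include is a per-page judgment call.

```python
import json


def condition_jsonld(name: str, symptoms: list[str], therapies: list[str], page_url: str) -> str:
    """schema.org MedicalWebPage JSON-LD whose subject is a MedicalCondition."""
    data = {
        "@context": "https://schema.org",
        "@type": "MedicalWebPage",
        "url": page_url,
        "about": {
            "@type": "MedicalCondition",
            "name": name,
            "signOrSymptom": [{"@type": "MedicalSignOrSymptom", "name": s} for s in symptoms],
            "possibleTreatment": [{"@type": "MedicalTherapy", "name": t} for t in therapies],
        },
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    print(condition_jsonld(
        name="Lumbar disc herniation",
        symptoms=["Radiating leg pain"],  # placeholder: list what the page actually covers
        therapies=["Spinal manipulation", "Physical therapy co-management"],
        page_url="https://example-chiro.com/conditions/disc-herniation",  # hypothetical URL
    ))
```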

Wait 4-8 weeks for the updated pages to be re-crawled and reflected in model answers. Then re-run the exact same 62-query set across all three models and measure citation lift.

If a site goes from 1 citation to 5+ across multiple models, the hypothesis holds — and we’ll have the first documented before/after AEO intervention for a healthcare practitioner site.
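The Phase 2 success test can be written down before any data comes in. This minimal sketch assumes the same hypothetical `(query_id, model, domain)` row layout as the baseline log. It reads "from 1 citation to 5+ across multiple models" as: at least 5 citations after the intervention, from at least 2 models, and more than before. `citation_profile` and `hypothesis_holds` are hypothetical helper names.

```python
from collections import Counter

# One logged citation: (query_id, model, domain). Hypothetical row layout.
Citation = tuple[str, str, str]


def citation_profile(rows: list[Citation], domain: str) -> Counter:
    """Citations of one domain, counted per model."""
    return Counter(model for _query_id, model, cited in rows if cited == domain)


def hypothesis_holds(before: Counter, after: Counter,
                     min_total: int = 5, min_models: int = 2) -> bool:
    """One reading of the success test: 5+ citations from 2+ models, and more than before."""
    total_before = sum(before.values())
    total_after = sum(after.values())
    models_after = sum(1 for count in after.values() if count > 0)
    return total_after >= min_total and models_after >= min_models and total_after > total_before


if __name__ == "__main__":
    baseline = [("q1", "gpt", "site.com")]  # hypothetical rows
    rerun = [("q1", "gpt", "site.com"), ("q2", "gpt", "site.com"), ("q3", "gemini", "site.com"),
             ("q4", "perplexity", "site.com"), ("q5", "gpt", "site.com")]
    print(hypothesis_holds(citation_profile(baseline, "site.com"),
                           citation_profile(rerun, "site.com")))
```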

If it doesn’t work, that’s equally publishable. The AEO community is still building its evidence base. Honest data from a new vertical is valuable regardless of what it shows.

If You Run a Chiropractic Practice: Five Things You Can Do This Month

1. Implement FAQ Schema on your top 5 condition pages. None of the 31 practice sites I audited had done this, so the competition is effectively zero. Use Google’s Rich Results Test to validate. This is the closest thing to a free lunch in AEO.

2. Rewrite one condition page using reasoning, not claims. Pick your highest-traffic condition page. Cite specific studies (link to PubMed). Show your decision framework. Include reassessment criteria. Add when-to-refer-out guidance. Make the page something an LLM can’t generate from training data.

3. Build template consistency. Use the same heading structure and the same section types across every condition page. Models latch onto predictable patterns. If your sciatica page and your disc herniation page use the same structural template, models may generalize trust from one to the other.

4. Write the page nobody in your profession wants to write. “Is chiropractic pseudoscience?” has 4,700 monthly searches. “Normal treatment plan vs. billing scam” has zero SERP presence. “Did my chiropractor hurt me?” has the highest emotional urgency of any query in the study. The first practice to answer these honestly — with evidence, with nuance, with scope-of-practice awareness — owns a position nobody else has the courage to occupy.

5. Stop worrying about Domain Rating. Your DR doesn’t matter. A site with DR 1 got cited 7 times across 3 models. A site with DR 40+ got cited once. The metric that matters is information gain — does your page contain something an AI model can’t already say?
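Template consistency (point 3 above) is easy to audit mechanically. This rough sketch assumes each condition page marks its sections as `<h2>` headings and that your template is a fixed list of section titles. The titles here are hypothetical, and matching is exact. It uses only Python’s standard-library `html.parser` and reports which template sections each page is missing.

```python
from html.parser import HTMLParser


class H2Collector(HTMLParser):
    """Collect the text of every <h2> element, in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[str] = []
        self._inside_h2 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._inside_h2 = True
            self.headings.append("")

    def handle_endtag(self, tag):
        if tag == "h2" and self._inside_h2:
            self._inside_h2 = False
            # Collapse whitespace left over from nested tags and line breaks.
            self.headings[-1] = " ".join(self.headings[-1].split())

    def handle_data(self, data):
        if self._inside_h2:
            self.headings[-1] += data


def h2_outline(html: str) -> list[str]:
    parser = H2Collector()
    parser.feed(html)
    parser.close()
    return parser.headings


def template_gaps(pages: dict[str, str], template: list[str]) -> dict[str, list[str]]:
    """For each page, the template sections missing from its <h2> outline (exact match)."""
    gaps = {}
    for name, html in pages.items():
        present = set(h2_outline(html))
        gaps[name] = [section for section in template if section not in present]
    return gaps


if __name__ == "__main__":
    template = ["What it is", "How we evaluate it", "When we refer out"]  # hypothetical
    pages = {
        "sciatica": "<h2>What it is</h2><h2>How we evaluate it</h2><h2>When we <em>refer</em> out</h2>",
        "disc-herniation": "<h2>What it is</h2><h2>Treatment</h2>",
    }
    print(template_gaps(pages, template))
```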

The Bigger Picture

Every finding in this study likely generalizes beyond chiropractic. Physical therapists, dentists, optometrists, mental health counselors — every local healthcare practitioner vertical probably shares the same patterns: atomized citation pools, schema deserts, commodity content, and a Layer 1→3 bridge gap that nobody has filled.

The AEO Playbook was built for companies with growth teams and enterprise tooling. But its principles (interpretability over authority, structure over volume, information gain over keyword density) apply just as powerfully to a solo practitioner with a WordPress site. (Well, maybe not WordPress… It’s 2026! Upgrade to Framer!) The operational mechanics differ. The physics are identical.

The first practice in any healthcare vertical to implement evidence-cited, structurally engineered content with schema markup is playing a game with no competitors.

That game starts now.

The full Phase 1 study with all data tables, methodology appendix, and scoring rubrics is available here. The raw data — all 2,293 citations, 62 queries, structural fingerprint scorecards, and Ahrefs authority analysis — is published for anyone to replicate or challenge.

Phase 2 results will be published as they come in. Subscribe to follow the experiment.